Getting started with Lighthouse

Explore how to leverage Google Lighthouse to improve your store's overall performance.

How users experience your website directly impacts key ecommerce metrics, such as user session time and sales conversion rate. Adopting the right tools and strategies to understand how shoppers perceive and interact with your website is essential to improving your store's performance. One of the leading tools for assessing your website's performance from the user's point of view is Google Lighthouse.

In this guide, learn how Google Lighthouse can help you to optimize your website.

Lighthouse overview

Lighthouse is a free, open-source tool developed by Google that identifies common issues and provides insights into a website's performance, usability, and overall quality.

By auditing a web page URL, Lighthouse generates detailed reports on how the page performs against web standards and developers' best practices. These insights help enhance the website's quality and user experience.

Lighthouse audits are grouped into four categories:

- Performance: Evaluates how users perceive and experience a web page by testing key web metrics.

- Accessibility: Assesses the page usability for people with disabilities or impairments.

- SEO: Checks if the page is optimized for search engine results ranking.

- Best practices: Assesses the overall code health of a webpage. Lighthouse also runs checks for best practices in modern web development, focusing on security aspects.

Running Lighthouse audits

You can conduct a Lighthouse audit in different ways: using PageSpeed Insights, Chrome DevTools, the command line, or a Chrome extension.

Although Lighthouse is a powerful tool for inspecting web page quality, it operates only at the URL level. To ensure comprehensive coverage of the web page quality, we strongly recommend testing at least the Home page, a Product Details Page (PDP), and a Collection page of your store.
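
As a minimal sketch of auditing several page types from the command line, the Python snippet below builds one Lighthouse CLI command per page. It assumes the Lighthouse CLI is installed (for example, via npm) and available as `lighthouse`. The store URLs, the report file names, and the helper names `build_lighthouse_command` and `audit_pages` are hypothetical placeholders.

```python
import subprocess

# Hypothetical store URLs: replace them with your own Home, PDP, and Collection pages.
PAGES = {
    "home": "https://www.example.com/",
    "pdp": "https://www.example.com/sample-product/p",
    "collection": "https://www.example.com/sample-collection",
}


def build_lighthouse_command(url: str, report_path: str) -> list[str]:
    """Build a Lighthouse CLI command that saves a JSON report for one URL."""
    return [
        "lighthouse",
        url,
        "--output=json",
        f"--output-path={report_path}",
        "--chrome-flags=--headless",
    ]


def audit_pages(pages: dict[str, str]) -> None:
    """Run one audit per page. Requires the Lighthouse CLI and Chrome."""
    for name, url in pages.items():
        command = build_lighthouse_command(url, f"./{name}-report.json")
        subprocess.run(command, check=True)
```

Calling `audit_pages(PAGES)` would run the three audits in sequence and write one JSON report per page type.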

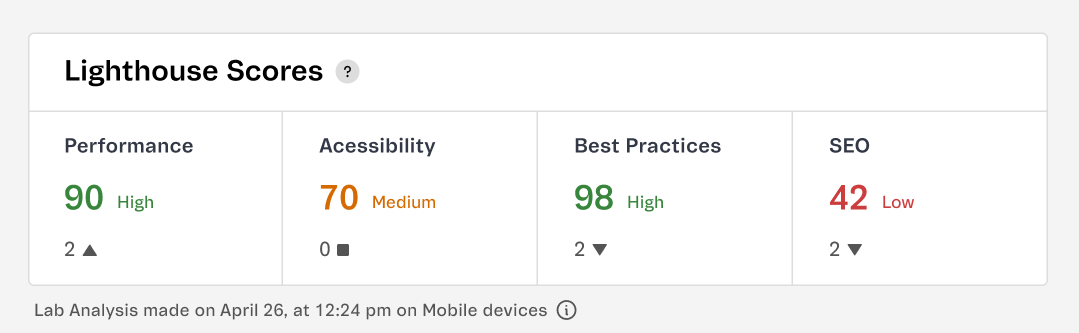

FastStore WebOps - Lighthouse scores

For stores using FastStore, you can run Lighthouse audits using the methods presented in the previous section. You can also streamline your web audits by using the Lighthouse scores tool for the FastStore WebOps app.

Checking these reports helps you keep track of how each PR affects your store performance, SEO, and accessibility.

Lighthouse reports is a native WebOps tool. For more information about using this tool, see the Lighthouse scores documentation.

Performance audit

When you submit a URL to Lighthouse to audit your web page performance, it creates a report with the following information:

- Performance score and metrics: A weighted average of Metrics scores and results of independent metrics that aim to represent the user's perception of performance.

- Opportunities: Suggestions and documentation on how to improve page load speed, with estimated potential savings.

- Diagnostics: Additional guidance that developers can explore to further enhance page performance.

- Passed audits: Suggestions from Opportunities and Diagnostics that have already been implemented on the page.

- Additional information: Details about the sampled data, including the data collection period, devices used for emulation, Chrome version, etc.

In general, only Metrics — not the outcomes of Opportunities and Diagnostics — directly affect the overall Performance score. However, there is an indirect relationship because implementing the suggestions from Opportunities and Diagnostics will likely improve the Metric scores as well.
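
If you export a report as JSON, the score and metric values can be read programmatically. The sketch below assumes the report layout used by recent Lighthouse versions, where `categories.performance.score` is on a 0 to 1 scale and each metric audit exposes a `numericValue`. The function names `summarize_performance` and `load_report` are hypothetical helpers, not part of Lighthouse.

```python
import json

# Audit IDs of the five metrics as they appear in Lighthouse JSON reports.
METRIC_AUDIT_IDS = [
    "total-blocking-time",
    "largest-contentful-paint",
    "cumulative-layout-shift",
    "first-contentful-paint",
    "speed-index",
]


def summarize_performance(report: dict) -> dict:
    """Extract the overall Performance score and the raw metric values."""
    score = report["categories"]["performance"]["score"]  # 0 to 1, or None on error
    metrics = {
        audit_id: report["audits"][audit_id].get("numericValue")
        for audit_id in METRIC_AUDIT_IDS
        if audit_id in report["audits"]
    }
    return {
        "performance_score": None if score is None else round(score * 100),
        "metrics": metrics,
    }


def load_report(path: str) -> dict:
    """Load a Lighthouse JSON report from disk."""
    with open(path, encoding="utf-8") as file:
        return json.load(file)
```

For example, `summarize_performance(load_report("./home-report.json"))` would return the score on a 0 to 100 scale together with the raw metric values, assuming a report file with that name exists.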

Performance score and metrics

The Lighthouse Performance score provides an estimated representation of user perception of performance. While a computer may define performance by the time it takes for the entire page to load, this specific metric doesn't always reflect what the user values. From the user's point of view, performance is a perception of how they experience the page loading and how responsive it feels during use.

To ensure Lighthouse metrics are relevant to users, they are structured around a few key questions:

- Is it happening? When the first visual activities occur and the user notices that the page is loading. If the server doesn't respond quickly, the user may feel there is a problem and leave the page.

- Is it useful? When enough valuable content is rendered. This moment can keep the user engaged.

- Is it usable? When the UI seems ready for interaction. This moment can be frustrating if users try to interact with visible content but the page is still processing.

- Is it delightful? The moment after loading that guarantees smooth and lag-free interactions.

Given these four moments during the page load journey, a series of individual metrics were created to reflect what could be relevant for users in each moment. These metrics include the time it takes for the first contentful paint to be displayed and for the page to become interactive. A weighted average of these metrics determines the final Lighthouse Performance score:

| Audit | Weight |
|---|---|
| Total Blocking Time (TBT) | 30% |
| Largest Contentful Paint (LCP) | 25% |
| Cumulative Layout Shift (CLS) | 25% |
| First Contentful Paint (FCP) | 10% |
| Speed Index (SI) | 10% |

The following sections briefly explain each of these metrics. Notice that the Total Blocking Time, Largest Contentful Paint, and Cumulative Layout Shift have higher weights and, consequently, a greater impact on the overall performance score. Therefore, paying close attention to these three metrics is essential.
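
To illustrate the weighted average, the sketch below combines per-metric scores using the weights from the table above. The input scores are made-up values on a 0 to 100 scale, and `performance_score` is a hypothetical helper name. How Lighthouse converts raw metric values into per-metric scores is outside the scope of this example.

```python
# Weights taken from the table above.
WEIGHTS = {"TBT": 0.30, "LCP": 0.25, "CLS": 0.25, "FCP": 0.10, "SI": 0.10}


def performance_score(metric_scores: dict[str, float]) -> float:
    """Return the weighted average of per-metric scores (each from 0 to 100)."""
    return sum(WEIGHTS[name] * metric_scores[name] for name in WEIGHTS)


# Made-up metric scores for illustration only.
example_scores = {"TBT": 60, "LCP": 80, "CLS": 100, "FCP": 90, "SI": 90}
print(round(performance_score(example_scores)))  # 18 + 20 + 25 + 9 + 9 = 81
```

In this example, raising the TBT score by 10 points adds 3 points to the overall score, while the same gain in FCP adds only 1 point.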

Because Lighthouse's scoring formula is adjusted from time to time, the overall performance score may vary between Lighthouse versions. To stay up to date with Lighthouse's latest updates, we recommend visiting the Lighthouse performance scoring article.

When running Lighthouse, you will notice that the metric results follow a color code based on the following ranges:

| Code | Meaning | Range |
|---|---|---|
| ▲ | Poor | 0–49 |
| ■ | Needs improvement | 50–89 |
| ● | Good | 90–100 |
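
The ranges above can be expressed as a small helper, shown here as an illustrative sketch. The function name `classify_score` is hypothetical.

```python
def classify_score(score: int) -> str:
    """Map a 0-100 score to the label used in the color code table."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 90:
        return "Good"
    if score >= 50:
        return "Needs improvement"
    return "Poor"
```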

Total Blocking Time (TBT) | 30%

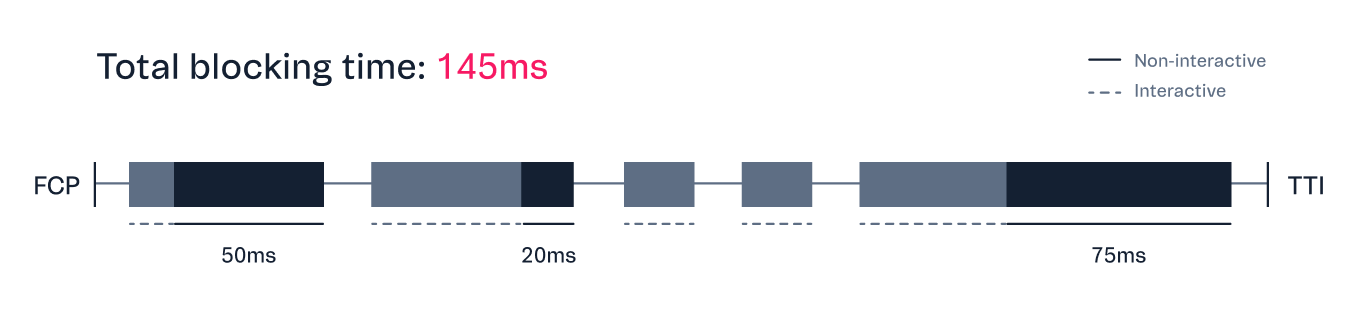

The Total Blocking Time (TBT) is the amount of time between the First Contentful Paint (FCP) and Time to Interactive (TTI) during which user inputs, such as mouse clicks, screen taps, or keyboard presses, are blocked. When a page has a long TBT, the user may notice the delay and perceive the page as sluggish.

User interaction is blocked when a long task blocks the main thread and prevents the browser from responding to other requests. Blocking time starts to count after 50ms. For example, if a function takes 150ms to execute, the blocking time would be 100ms. Therefore, the Total Blocking Time of a page is the sum of the blocking time for each long task that occurs during page load.
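
The calculation described above can be sketched as follows. The example assumes that the durations of the main-thread tasks running between FCP and TTI have already been collected. The function name `total_blocking_time` and the sample durations are hypothetical.

```python
BLOCKING_THRESHOLD_MS = 50  # Only the time beyond 50 ms counts as blocking.


def total_blocking_time(task_durations_ms: list[float]) -> float:
    """Sum the blocking portion of each task that runs longer than 50 ms."""
    return sum(
        max(0.0, duration - BLOCKING_THRESHOLD_MS)
        for duration in task_durations_ms
    )


# Tasks of 150 ms, 40 ms, and 70 ms contribute 100 ms, 0 ms, and 20 ms.
print(total_blocking_time([150, 40, 70]))  # 120.0
```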

See below how Lighthouse classifies TBT times:

| TBT time (milliseconds) | Color-coding |
|---|---|
| Over 600 | ▲ Poor |
| 200 – 600 | ■ Needs improvement |
| 0 – 200 | ● Good |

TBT is mainly affected by the parsing and execution of JavaScript and third-party scripts. Besides removing or reducing JavaScript code, you can improve TBT by splitting long tasks into smaller ones, improving code efficiency, and implementing lazy loading.

For more information about improving TBT, see Google's guidelines.

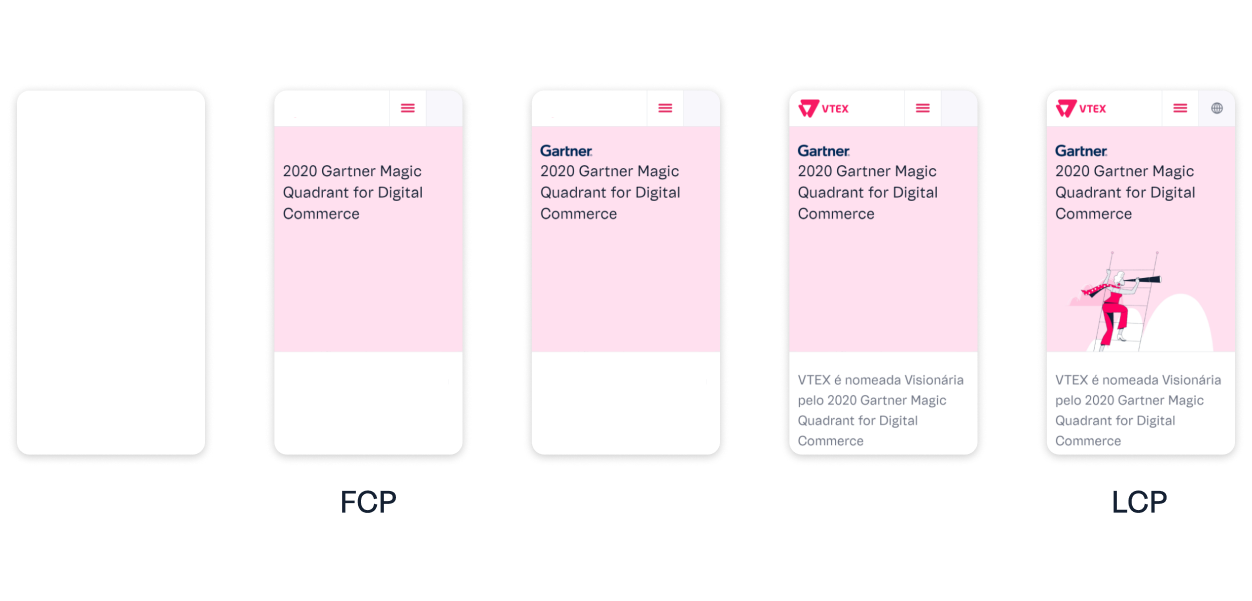

Largest Contentful Paint (LCP) | 25%

Largest Contentful Paint (LCP) measures the time it takes to render the largest visible element on the page within the viewport. Fast LCPs help reassure the user that the page is useful.

| LCP time (seconds) | Color-coding |
|---|---|
| Over 4 | ▲ Poor |
| 2.5–4 | ■ Needs improvement |
| 0–2.5 | ● Good |

The metric only considers the loading time of elements significantly relevant to the user experience, such as:

- `<img />` elements.

- `<image>` elements inside an `<svg>` element.

- `<video>` elements.

- Elements with a background image loaded via the `url()` function.

- Block-level elements, such as `<h1>`, `<h2>`, `<div>`, `<ul>`, `<table>`, etc.

LCP is primarily affected by the following factors:

- Long server response times.

- Render-blocking resources, such as CSS stylesheets, font files, and JavaScript scripts.

- Resource size and load time.

- Client-side rendering.

For more information about how to improve LCP, see Google's guidelines.

Cumulative Layout Shift (CLS) | 25%

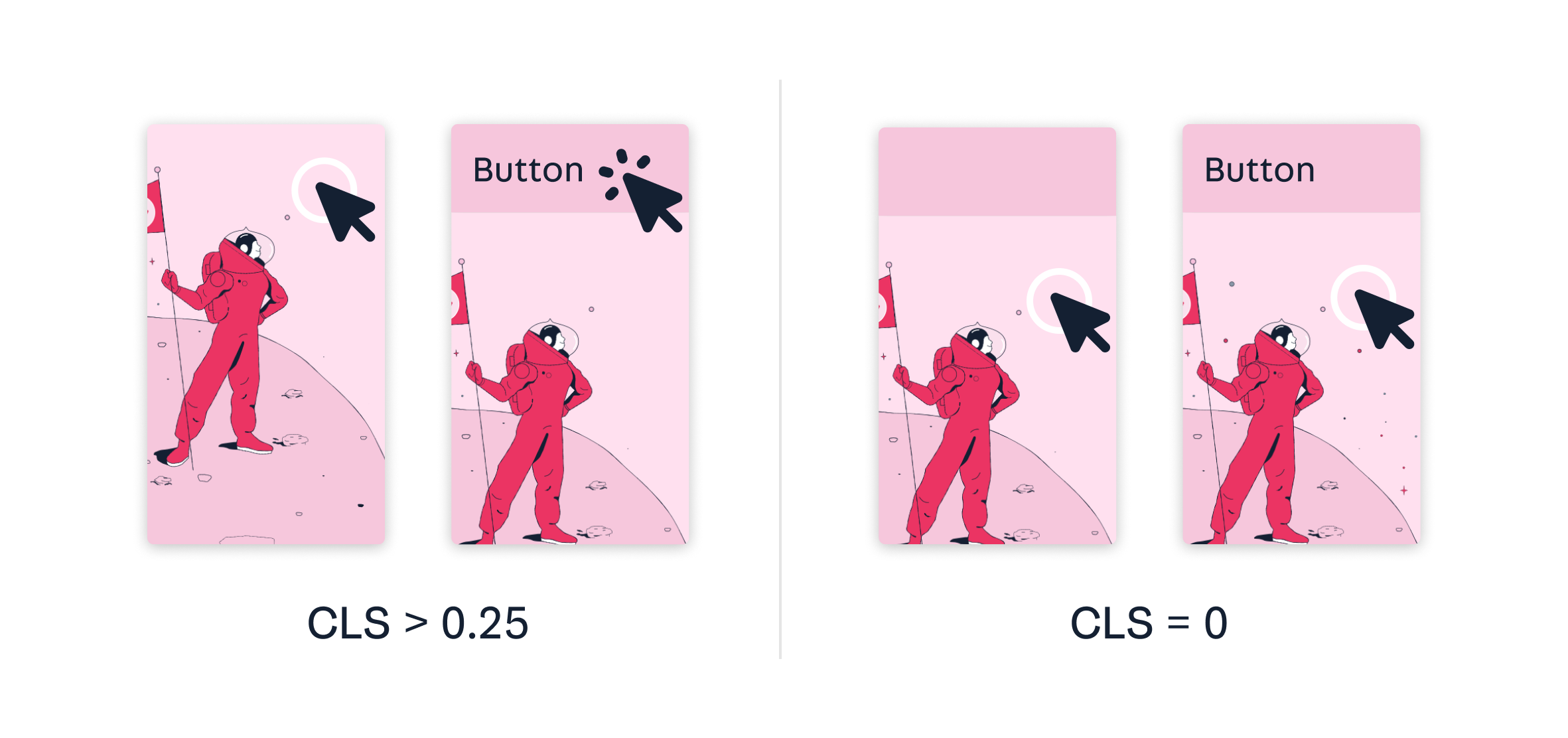

The Cumulative Layout Shift (CLS) metric measures the impact of undesired content shifts that occur during the entire lifespan of a page.

A layout shift occurs when a visible element changes its initial position from one rendered frame to the next. A website with a low CLS has a steady page display without any elements shifting around. A high CLS may annoy users and affect how they interact with the page, causing them to click an unwanted button or link.

| CLS | Color-coding |
|---|---|
| Over 0.25 | ▲ Poor |
| 0.1 – 0.25 | ■ Needs improvement |
| 0 – 0.1 | ● Good |

This metric is mostly affected by images, ads, embeds, and iframes without predefined dimensions, as well as by content dynamically inserted by JavaScript on the client side (e.g., a bar that suddenly appears in the header).

For more information about improving CLS, see Google's guidelines.

First Contentful Paint (FCP) | 10%

First Contentful Paint (FCP) measures the time it takes for the browser to render the first piece of content from the Document Object Model (DOM). Short FCP times mean fast visual activity from the browser. They reassure the user that something is happening during the page load and, therefore, increase the chance of keeping users engaged.

See below how Lighthouse classifies FCP times:

| FCP time (seconds) | Color-coding |
|---|---|
| Over 3 | ▲ Poor |
| 1.8–3 | ■ Needs improvement |
| 0–1.8 | ● Good |

FCP affects most of the other metrics, so a long FCP is a major warning sign. This metric can be affected by:

- Long server response times.

- Render-blocking resources, such as CSS stylesheets, font files, and JavaScript scripts.

- Script-based elements above the fold.

- Large DOM trees.

- Multiple 301 redirects.

For more information about improving FCP, see Google's guidelines.

Speed Index (SI) | 10%

The Speed Index (SI) indicates how quickly a page's elements become visible in the viewport. The Speed Index is a synthetic value that calculates the visual differences between frames captured during the page load. Until all content is visible, each frame is scored considering its visual completeness. These individual scores are then summed to determine the page's Speed Index.
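
The idea behind this calculation can be sketched with a simplified approximation, which is not Lighthouse's actual implementation. It assumes each captured frame is a pair of timestamp (in milliseconds) and visual completeness (from 0 to 1), and that each frame's incompleteness is weighted by the time until the next frame. The function name `speed_index` and the sample frames are hypothetical.

```python
def speed_index(frames: list[tuple[float, float]]) -> float:
    """Approximate the Speed Index in milliseconds.

    `frames` is a time-ordered list of (timestamp_ms, visual_completeness),
    where visual_completeness ranges from 0.0 (blank) to 1.0 (fully rendered).
    """
    total = 0.0
    for (start_ms, completeness), (end_ms, _) in zip(frames, frames[1:]):
        # The less complete the frame, the more its interval adds to the index.
        total += (end_ms - start_ms) * (1.0 - completeness)
    return total


# Blank for the first second, half rendered for the next, then complete.
print(speed_index([(0, 0.0), (1000, 0.5), (2000, 1.0)]))  # 1500.0
```

A page that shows most of its content early gets a lower (better) value than a page that reaches full completeness at the same time but stays blank longer.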

See below how Lighthouse classifies SI times:

| SI time (seconds) | Color-coding |
|---|---|
| Over 5.8 | ▲ Poor |
| 3.4–5.8 | ■ Needs improvement |
| 0–3.4 | ● Good |

The Speed Index is a unified metric that considers many other metrics and audits, which can help you figure out whether your page speed optimizations are working. Generally, most improvements to your page's First Contentful Paint and Largest Contentful Paint are also likely to improve the Speed Index.

For more information about improving SI, see Google's guidelines.
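
As a recap, the threshold tables from the metric sections above can be collected into one lookup. This is an illustrative sketch: the function name `classify_metric` is hypothetical, and because the table ranges share their boundary values, the code assumes that a value exactly on a boundary falls into the better class.

```python
# (upper bound for "Good", upper bound for "Needs improvement") per metric.
THRESHOLDS = {
    "TBT": (200, 600),   # milliseconds
    "LCP": (2.5, 4.0),   # seconds
    "CLS": (0.1, 0.25),  # unitless
    "FCP": (1.8, 3.0),   # seconds
    "SI": (3.4, 5.8),    # seconds
}


def classify_metric(name: str, value: float) -> str:
    """Return the color-coding label for a raw metric value."""
    good_max, needs_improvement_max = THRESHOLDS[name]
    if value <= good_max:
        return "Good"
    if value <= needs_improvement_max:
        return "Needs improvement"
    return "Poor"
```

For example, `classify_metric("LCP", 3.1)` returns `"Needs improvement"`, and `classify_metric("TBT", 750)` returns `"Poor"`.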

Opportunities

The Opportunities section provides insights that could help your page load faster. The priority level of each opportunity is indicated by a color code similar to the one used for the metrics: red triangles for high priority, orange squares for medium priority, and gray circles for low priority.

Use the Opportunities section to determine which improvements will benefit your page the most. The more significant the opportunity, the more likely it is to affect your performance score.

Click each opportunity to get detailed information and documentation about fixing the related issues. Also, notice that unless you have a specific reason for focusing on a particular metric, it's usually better to focus on improving your overall performance score.

Diagnostics

The Diagnostics section provides more information about the performance of your application. It indicates the largest contentful paint element on your page, lists the longest tasks on the main thread, and displays the cache lifetime, among other useful details.

Once again, the details presented in this section do not directly affect the performance score but are likely to improve the metric values if considered.

Passed audits

The Passed audits section lists all the audits that the page passed. Once you implement the suggestions from Opportunities and Diagnostics, you can retest your page to see if they now appear in the Passed audits section.

Additional information

The Additional information section shares the following details about the sampled data:

- Data collection period

- Visit durations

- Devices

- Network connections

- Sample size

- Chrome version